By Richie McColl

Introduction

The world of artificial intelligence evolves fast. New applications seem to be released every day, and it’s hard to keep up.

As AI models get better at understanding code, we decided to start a small research project at NearForm to investigate the potential for AI to improve the code review process. Most popular applications focus on the AI producing code itself, such as GitHub Copilot. Our focus for this project was to use AI to provide useful suggestions on human-written code.

This post explores the intersection of AI and code review. We’ll see what our prototype looks like, how it works and what we learned.

Prerequisites

As far as the stack and dependencies go, the setup is pretty straightforward. We’re using a GitHub App, a GitHub feature that helps us automate workflows. If you would like to read more, check out GitHub’s documentation on GitHub Apps.

The most popular JavaScript framework for building GitHub Apps is Probot, and we used it on Node.js v18.

The code used for this prototype is available in this repository.

What we built

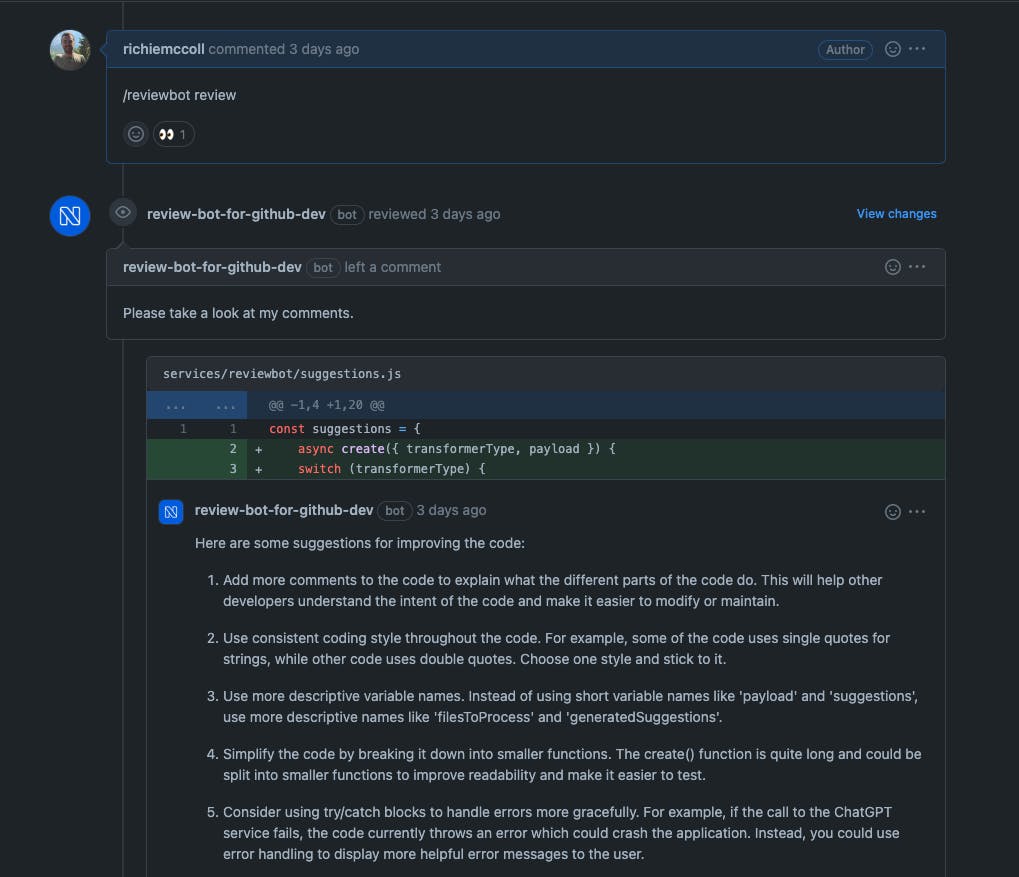

Let’s start with the user perspective and what a typical user flow might look like:

Developer installs the reviewbot GitHub App

Developer creates a pull request on GitHub

Developer types /reviewbot review as a GitHub comment

The reviewbot GitHub App creates a new review of the pull request, which contains comments as suggestions

How it works

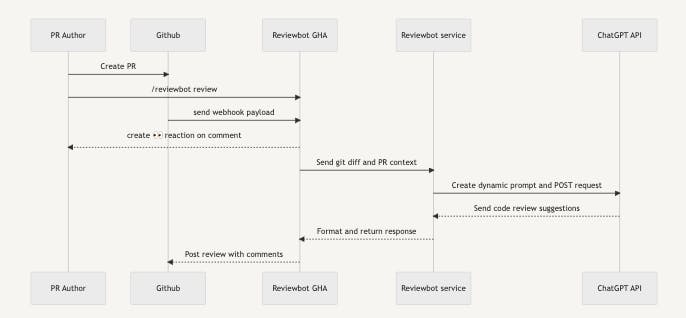

So let’s break down the components that make up this workflow. The high-level sequence diagram above shows the set of actors involved in the process.

Receiving the webhook

The first part of the flow is to get the payload from GitHub to our Probot application.

Probot recommends Smee.io for delivering webhook payloads during development. We settled on an alternative, ngrok. The combination of webhook forwarding and the GitHub App gives us the first major part of the diagram.

To set up a listener and handler for receiving the webhook payload from GitHub, it’s as simple as the following snippet:

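// Probot calls this function with the app instance when it starts
module.exports = (app) => {
  // "issue_comment.created" fires when a comment is added to an issue or pull request
  app.on("issue_comment.created", async (context) => {
    // Do something with context...
  });
};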
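Because the `issue_comment` webhook fires for comments on both issues and pull requests, the handler has to filter for the `/reviewbot review` command on a pull request before doing any work. The sketch below shows one way to do that with Probot’s `issue_comment.created` event. It assumes the command must appear on the first line of the comment, and `isReviewCommand` and `reviewbotApp` are hypothetical names, not taken from the reviewbot repository.

```javascript
// Hypothetical helper: true when the comment's first line is exactly "/reviewbot review".
function isReviewCommand(commentBody) {
  const firstLine = (commentBody || "").trim().split("\n")[0].trim();
  return firstLine === "/reviewbot review";
}

// Hypothetical Probot app function; Probot passes in the app instance.
function reviewbotApp(app) {
  app.on("issue_comment.created", async (context) => {
    const { comment, issue } = context.payload;
    // Only comments on pull requests carry an `issue.pull_request` object.
    if (!issue.pull_request || !isReviewCommand(comment.body)) {
      return;
    }
    app.log.info(`Review requested on pull request #${issue.number}`);
    // Assumed next steps: fetch the diff, build the prompt, call OpenAI, post the review.
  });
}
```

In a Probot project, the app function would be the module’s export, for example `module.exports = reviewbotApp;`.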

Creating review suggestions

Now on to the more interesting part of the project. We decided to go with the OpenAI ChatGPT service for this prototype. OpenAI offers two different APIs, both of which we explored:

Completions API

Chat Completions API

Both APIs offer a variety of models, and it was interesting to explore the differences and subtleties between them. The models we explored included:

gpt-3.5-turbo

text-davinci-003

text-davinci-002

OpenAI recommend the gpt-3.5-turbo model because it is more refined than the older ones but, more importantly, it’s cheaper.

The main implementation difference between the two APIs was how the prompt is formatted in the request payload:

// Completions API
{
  model: "text-davinci-003",
  prompt: "How would you improve this code snippet?",
  // ...other parameters
}

// Chat Completions API
{
  model: "gpt-3.5-turbo",
  messages: [{ role: "user", content: "How would you improve this code snippet?" }],
}
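To make the difference concrete, here is a minimal sketch that builds either payload and sends it with the `fetch` function built into Node.js v18. It assumes that model names starting with `gpt-` go to the Chat Completions endpoint and everything else goes to the Completions endpoint, and the `max_tokens` value is an arbitrary choice. `buildOpenAIRequest` and `requestSuggestion` are hypothetical names.

```javascript
// Hypothetical helper: picks the endpoint and payload shape for a model.
// Assumption: "gpt-" models use Chat Completions, older models use Completions.
function buildOpenAIRequest(model, promptText) {
  if (model.startsWith("gpt-")) {
    return {
      url: "https://api.openai.com/v1/chat/completions",
      // Chat Completions carries the prompt as a list of role-tagged messages.
      body: { model, messages: [{ role: "user", content: promptText }] },
    };
  }
  return {
    url: "https://api.openai.com/v1/completions",
    // Completions carries a plain prompt string; max_tokens defaults to a small
    // value, so an explicit (arbitrary) limit is set here.
    body: { model, prompt: promptText, max_tokens: 256 },
  };
}

// Sends the request with Node 18's global fetch and returns the reply text.
async function requestSuggestion(model, promptText, apiKey) {
  const { url, body } = buildOpenAIRequest(model, promptText);
  const response = await fetch(url, {
    method: "POST",
    headers: { "Content-Type": "application/json", Authorization: `Bearer ${apiKey}` },
    body: JSON.stringify(body),
  });
  if (!response.ok) {
    throw new Error(`OpenAI API error: ${response.status}`);
  }
  const data = await response.json();
  const choice = data.choices[0];
  // Chat responses put the text in message.content; completions put it in text.
  return choice.message ? choice.message.content : choice.text;
}
```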

At the time of writing, we’re waiting for API access to the newest model, GPT-4. We’ve been using it for various tasks in the OpenAI Playground, and its responses seem to be much more accurate.

Prompt Engineering

One of the more interesting aspects of this project was exploring the concept of Prompt Engineering. It’s a relatively new term that has started to appear alongside all the AI and Large Language Model (LLM) hype. We’ve written more about generating prompts in our blog post “Writing Code or Writing Prompts? Solving a Problem With GPT-3”.

The basic idea is that you tweak the content of the question you send to the AI model so that it gives you better results. To me, it’s like a more advanced form of ‘Google-fu’, a term for how good someone is at using Google search to find what they want on the internet.

When it comes to natural language generation, there are various tasks that require someone to ‘engineer’ a prompt. These include:

Text Summarisation

Information Extraction

Question Answering

Code Generation

After many iterations, we settled on a prompt that combined Question Answering and Code Generation. This seemed to get the best results from the ChatGPT API for this project. We built a PromptEngine service that parses the git diff and dynamically combines it with the prompt. The result is what we format into the payload we send to the ChatGPT API.
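The PromptEngine code isn’t reproduced in this post, so the following is only a sketch of the idea, not NearForm’s implementation. It parses a unified git diff into the lines each file adds, keeping each line’s number in the new version of the file so review comments can later be attached to it, and then wraps those lines in Chat Completions messages. The function names and the instruction text are hypothetical.

```javascript
// Hypothetical parser: splits a unified git diff into files and their added lines.
function parseDiff(diffText) {
  const files = [];
  let current = null;
  let newLine = 0; // line number in the new version of the current file
  for (const line of diffText.split("\n")) {
    if (line.startsWith("+++ ")) {
      // "+++ b/src/app.js" -> "src/app.js"
      current = { path: line.slice(4).replace(/^b\//, ""), added: [] };
      files.push(current);
    } else if (line.startsWith("@@")) {
      // Hunk header "@@ -10,7 +12,8 @@": the new file's hunk starts at line 12.
      const match = /\+(\d+)/.exec(line);
      newLine = match ? Number(match[1]) : 0;
    } else if (current && line.startsWith("+")) {
      current.added.push({ line: newLine, text: line.slice(1) });
      newLine += 1;
    } else if (current && line.startsWith(" ")) {
      newLine += 1; // context line: present in both versions
    }
    // Removed lines ("-") don't exist in the new file, so they don't advance newLine.
  }
  return files;
}

// Hypothetical prompt builder: a question-plus-code-generation instruction and the diff.
function buildReviewMessages(files) {
  const instruction =
    "You are reviewing a pull request. For each added line that could be improved, " +
    "reply with the file path, the line number and an improved version of the code.";
  const diffSummary = files
    .filter((file) => file.added.length > 0)
    .map((file) => `File: ${file.path}\n` + file.added.map((a) => `${a.line}: ${a.text}`).join("\n"))
    .join("\n\n");
  return [
    { role: "system", content: instruction },
    { role: "user", content: diffSummary },
  ];
}
```

Keeping only added lines keeps the prompt short, at the cost of hiding some of the surrounding context from the model.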

Creating the review

A typical Probot app also comes with JavaScript helpers for interacting with the GitHub API. These come via the @octokit/rest package.

Another useful feature of GitHub Apps is that they are first-class actors within GitHub. That means the app can act on its own behalf, taking actions via the API directly using its own identity.

So, once we had the suggestions from the ChatGPT API, the last step was to format the response into a payload that the GitHub API understands. With that done, the full sequence flow from earlier was complete.
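As a sketch of that last step, the code below turns a list of suggestions into the parameters for Octokit’s `pulls.createReview` method, which creates a pull request review with inline comments, and posts it through Probot’s `context.octokit` so the review appears under the app’s own identity. The suggestion shape `{ path, line, replacement, explanation }` and the function names are assumptions. Each comment body uses GitHub’s `suggestion` code block, which lets the author apply the change directly from the pull request.

```javascript
// Three backticks, built at runtime so this snippet contains no literal fence.
const FENCE = "`".repeat(3);

// Hypothetical helper: maps suggestions to pulls.createReview parameters.
function buildReviewParams({ owner, repo, pullNumber }, suggestions) {
  const comments = suggestions.map((s) => ({
    path: s.path,
    line: s.line, // line number in the new version of the file
    side: "RIGHT", // comment on the pull request's changes, not the base branch
    // A fenced block tagged "suggestion" is rendered by GitHub as an applicable change.
    body: `${s.explanation}\n\n${FENCE}suggestion\n${s.replacement}\n${FENCE}`,
  }));
  return {
    owner,
    repo,
    pull_number: pullNumber,
    event: "COMMENT", // leave comments without approving or requesting changes
    body: `reviewbot found ${comments.length} suggestion(s).`,
    comments,
  };
}

// Inside the Probot handler: context.pullRequest() returns { owner, repo, pull_number }.
async function postReview(context, suggestions) {
  const { owner, repo, pull_number } = context.pullRequest();
  const params = buildReviewParams({ owner, repo, pullNumber: pull_number }, suggestions);
  return context.octokit.pulls.createReview(params);
}
```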

What did we learn?

For a long time, building AI-powered tools was a novel concept. The techniques were hidden behind libraries and frameworks that the average engineer would never use, such as TensorFlow.

That idea has been completely flipped on its head with the latest generation of tools. It’s now easier than ever to start integrating these models into applications, which explains why we’re seeing so much hype in this space at the moment.

Alternatives to OpenAI

OpenAI and the ChatGPT suite of models are only a fraction of the options out there. We’ve done some exploration on alternatives to understand what is available.

Hugging Face is a good alternative. It’s another leading AI research organization and technology company, focused on developing and applying natural language processing (NLP) models and tools. Hugging Face offers a variety of services that we might explore in future iterations of this project, for instance:

Transformers Library: open-source library with state-of-the-art NLP models

Model Hub: pre-trained models contributed by the community

Inference API: service that enables developers to access NLP models

Challenges

We noticed three core, high-level risk areas when building this project. We think this is where many of the problems lie when building an AI-powered tool. The three risk areas are:

Context

Using ChatGPT for a task like this showed that it can be difficult to get relevant results, because the model has a limited understanding of the wider codebase. At times, it was unable to grasp the context and intent behind certain snippets, which usually led to irrelevant or incorrect recommendations.

Security and Privacy

There’s no storage implemented in this prototype; it only moves data back and forth between GitHub and OpenAI. Even so, ensuring the confidentiality of private code appears to be tricky, especially when relying on third-party AI tools and platforms.

Model Bias

Bias is a big problem with AI models. These models might learn and spread biases that were present in the training data, which can lead to biased recommendations or skewed interpretations of code snippets. We don’t know what bias ChatGPT may have, because we don’t know exactly what code training data it was provided with.

Conclusion

AI-powered code reviews might change how we work in the software industry. They certainly have the potential to make the whole process more efficient and accurate.

As machine learning algorithms improve, we should expect that these models will be able to understand complex code, detect subtle bugs and provide relevant suggestions for improvement. This will not only save time for developers but also help reduce the number of post-merge issues.

I think it is interesting to explore what future platforms with AI-enhanced collaboration might look like, and how we can use AI to collaborate, learn and build the next generation of software tools.