Save time and increase reliability with customisable blueprint unit test templates for Helm charts

By Iheanyi Onwubiko

DevOps engineers and developers, discover how you can reduce testing time and improve consistency across your projects

In our OPA policy-based testing of Helm charts blog post, we explored how to enforce standardised rules and best practices in Kubernetes deployments. While a policy-based testing approach significantly enhances security and compliance in Kubernetes environments, this article shifts the spotlight to unit testing for Helm charts.

Unit testing complements policy-based testing by verifying the functional correctness of individual components within Helm charts. Unlike policy testing, which ensures adherence to predefined rules, unit testing validates that each part of a chart behaves as expected under various conditions.

This blog post introduces a solution designed to speed up testing and boost reliability using standardised, customisable blueprint templates that adapt to any chart.

Leveraging the Helm unit test framework

We leveraged the Helm unit test framework as the base of the test templates. This tool allows developers to write unit tests for Helm in YAML, a familiar format within the Kubernetes world. The framework simplifies testing by checking if the rendered YAML output from a chart matches the expected results. For example, a simple test to check if a deployment exists might look like this:

suite: test deployment existence
templates:
  - deployment.yaml
tests:
  - it: should create a deployment
    asserts:
      - isKind:
          of: Deployment

This example test ensures that the chart generates a Kubernetes deployment resource. Let’s explore a more comprehensive example that showcases various testing techniques.

suite: test comprehensive deployment configuration
templates:
  - deployment.yaml
tests:
  - it: should be of correct kind, apiVersion, and count
    asserts:
      - hasDocuments:
          count: 1
      - isKind:
          of: Deployment
      - isAPIVersion:
          of: apps/v1
  - it: should create a deployment with correct specifications and metadata
    set:
      replicaCount: 3
      image:
        repository: nginx
        tag: 1.19.0
    release:
      name: production
    asserts:
      - equal:
          path: spec.replicas
          value: 3
      - equal:
          path: spec.template.spec.containers[0].image
          value: nginx:1.19.0
      - equal:
          path: metadata.name
          value: production-myapp
      - matchRegex:
          path: metadata.name
          pattern: ^production-.*$
      - isNotEmpty:
          path: spec.template.spec.containers[0].resources
      - contains:
          path: spec.template.spec.containers[0].ports
          content:
            containerPort: 80
      - isNotNullOrEmpty:
          path: metadata.labels
      - equal:
          path: metadata.labels.app
          value: myapp
  - it: should set resource limits
    asserts:
      - equal:
          path: spec.template.spec.containers[0].resources.limits.cpu
          value: 500m
      - equal:
          path: spec.template.spec.containers[0].resources.limits.memory
          value: 512Mi
  - it: should have appropriate security context
    asserts:
      - equal:
          path: spec.template.spec.securityContext.runAsNonRoot
          value: true
      - isNull:
          path: spec.template.spec.containers[0].securityContext.privileged
  - it: should configure liveness probe correctly
    asserts:
      - isNotNull:
          path: spec.template.spec.containers[0].livenessProbe
      - equal:
          path: spec.template.spec.containers[0].livenessProbe.httpGet.path
          value: /healthz

This comprehensive test suite demonstrates a wide range of Helm unit testing capabilities:

Basic structure validation:

- Checks for the correct resource kind (Deployment), API version (apps/v1) and document count.

Deployment specifications:

- Uses the “set” key to override chart values for testing specific scenarios.

- Verifies replica count, image details and naming conventions.

- Checks for the presence of resource specifications and correct port configuration.

Metadata and labelling:

- Ensures the correct naming convention (combining release name and app name) is used.

- Verifies the presence and content of labels.

Resource management:

- Checks for specific CPU and memory limits, crucial for resource allocation in Kubernetes.

Security configurations:

- Validates security context settings, ensuring the container runs as a non-root user and isn't privileged.

Health checks:

- Verifies the presence and configuration of a liveness probe.

You can find more information on how to define tests here.

While this test suite is suitable for a specific deployment, it is tightly coupled to the “myapp” application and the “production” release. How can we efficiently reuse this test or a set of test suites across various charts without duplicating effort? To make this more versatile, we could introduce placeholders like {{ CHART_NAME }}, {{ RELEASE_NAME }}, {{ CPU_LIMIT }}, {{ HEALTH_CHECK_PATH }}, {{ REPLICA_COUNT }} etc., to make this specific test suite into a reusable blueprint that can be easily adapted to different charts and configurations.

Blueprint unit test templates

A “templated” Helm test suite allows a test to be reused with different charts, aligning with the best practices of modularity and reusability. In other words, we start from standardised, pre-written blueprint test suites and adapt them to each project’s needs. For instance, if a test needs to verify the chart name, release name, deployment count and common metadata, the template includes those checks and can be adjusted per project requirements.

suite: test {{ CHART_NAME }} deployment
templates:
  - deployment.yaml
tests:
  - it: should be of correct kind, apiVersion, and count
    asserts:
      - hasDocuments:
          count: {{ DEPLOYMENT_COUNT }}
      - isKind:
          of: Deployment
      - isAPIVersion:
          of: apps/v1
  - it: should have common metadata
    release:
      name: {{ RELEASE_NAME }}
    asserts:
      - equal:
          path: metadata.name
          value: {{ RELEASE_NAME }}-{{ CHART_NAME }}
      - isNotNullOrEmpty:
          path: metadata.labels
  - it: should set resource limits
    asserts:
      - equal:
          path: spec.template.spec.containers[0].resources.limits.cpu
          value: {{ CPU_LIMIT }}
      - equal:
          path: spec.template.spec.containers[0].resources.limits.memory
          value: {{ MEMORY_LIMIT }}

The above test suite can now serve as a versatile blueprint unit test template, which DevOps practitioners can then fine-tune based on their chart’s specific set-up. This approach enables uniform and streamlined testing across diverse Helm charts, guaranteeing that each deployment fulfils the necessary criteria while reducing the labour required to develop and upkeep test suites.

To further streamline this process and fully leverage the benefits of this method, we wrote a custom Python script that converts the blueprints into customised test suites for each unique chart.

Processing the blueprints

To automate the creation of the final unit tests, we use a Python script that processes the blueprint test suites. This script replaces placeholders in the test suites with actual values from a configuration file. This step transforms our generic blueprints into chart-specific test suites. To demonstrate how to use this solution, let’s walk through a practical scenario using the blueprint deployment and ConfigMap test suites. Reference the sample code in the public repository here to follow along.
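As a rough illustration of the substitution step, the sketch below fills simple {{ NAME }} placeholders using only the Python standard library. It is a simplified stand-in rather than the repository's renderer.py, which relies on Jinja2 and also supports filters; the render_blueprint function name is hypothetical.

```python
import re

# Matches simple placeholders such as {{ CHART_NAME }} (no filters).
PLACEHOLDER = re.compile(r"\{\{\s*([A-Z_][A-Z0-9_]*)\s*\}\}")


def render_blueprint(text: str, values: dict) -> str:
    """Replace each {{ NAME }} with values[NAME]; fail loudly on unknown names."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value supplied for placeholder {name}")
        return str(values[name])

    return PLACEHOLDER.sub(substitute, text)


blueprint = "value: {{ RELEASE_NAME }}-{{ CHART_NAME }}"
rendered = render_blueprint(blueprint, {"CHART_NAME": "mars", "RELEASE_NAME": "foobar"})
print(rendered)  # value: foobar-mars
```

Raising on an unknown placeholder, instead of leaving it in the output, keeps a half-rendered test suite from reaching the test run.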

Preparing the environment

Before we begin, we need to have the following prerequisites installed:

Helm > v3.x

Python > 3.10

Git

Local Kubernetes cluster: Although not strictly required for testing purposes, setting up a local Kubernetes cluster with tools like minikube is advisable. This enables validation of the Helm charts in a Kubernetes environment.

You can verify installations by running:

helm version

python --version

git --version

Clone the repository:

git clone https://github.com/nearform/helm-unit-test-templates/

cd helm-unit-test-templates/sample-code

This is the folder structure:

sample-code/
├── README.md
├── mars/
│   ├── Chart.yaml
│   ├── templates/
│   ├── tests/
│   │   ├── deployment_test.yml
│   │   ├── configmap_test.yml
│   │   └── ...
│   └── values.yaml
├── notes_test.yml
├── renderer.py
├── run_tests.sh
└── values.json

Mars is the Helm chart we will use for the demo. The test suite templates are located in the mars/tests/ folder. We’ll focus on deployment_test.yml and configmap_test.yml to illustrate how these test suite templates work. Let’s take a look at the configmap_test.yml file:

# yaml-language-server: $schema=https://raw.githubusercontent.com/helm-unittest/helm-unittest/main/schema/helm-testsuite.json
suite: test {{ CHART_NAME }} configmap
templates:
  - configmap.yaml
tests:
  - it: should be of correct kind, apiVersion, and count
    asserts:
      - hasDocuments:
          count: {{ CONFIGMAP_COUNT | default(1) }}
      - isKind:
          of: ConfigMap
      - isAPIVersion:
          of: v1
  - it: should have common labels
    asserts:
      - isNotNullOrEmpty:
          path: metadata.labels
  - it: should have correct name (standard)
    documentIndex: 0
    release:
      name: {{ RELEASE_NAME }}
    asserts:
      - equal:
          path: metadata.name
          value: {{ RELEASE_NAME }}-{{ CHART_NAME }}
  - it: should not have Helm hook (standard)
    documentIndex: 0
    asserts:
      - notExists:
          path: metadata.annotations
  - it: should contain expected environment variables
    asserts:
      - equal:
          path: data
          value:
            {{ CONFIGMAP_DATA | to_yaml | indent(12) }}
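The last placeholder chains two filters, and a small sketch may help show why the indent width matters. Jinja2's built-in indent filter leaves the first line alone by default and indents the rest, so the placeholder's own position supplies the first line's indentation. The to_yaml filter is not built into Jinja2, so it is presumably registered by renderer.py; the to_yaml_mapping and indent_rest helpers below are hypothetical stdlib-only stand-ins that only handle a flat mapping.

```python
import json


def to_yaml_mapping(data: dict) -> str:
    """Serialise a flat mapping as YAML lines; JSON scalars are valid YAML."""
    return "\n".join(f"{key}: {json.dumps(value)}" for key, value in data.items())


def indent_rest(text: str, width: int) -> str:
    """Indent every line except the first, like Jinja2's indent filter default."""
    first, *rest = text.split("\n")
    return "\n".join([first] + [" " * width + line for line in rest])


# The placeholder sits 12 spaces in, directly under "value:".
template_line = " " * 12 + "{{ CONFIGMAP_DATA }}"
fragment = indent_rest(to_yaml_mapping({"TZ": "UTC", "JOHN": "DOE"}), 12)
rendered = template_line.replace("{{ CONFIGMAP_DATA }}", fragment)
print(rendered)
```

Both keys end up aligned at 12 spaces, which keeps them nested under value: in the rendered test suite.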

To customise the tests for a specific chart, we’ll need to modify the sample-code/values.json file. This file provides the actual values for the placeholders in the test templates. Let’s update this file with appropriate values for our Helm chart. You’ll notice that placeholders will be replaced when the tests are rendered.

{
  "CHART_NAME": "mars",
  "RELEASE_NAME": "foobar",
  "CONFIGMAP_COUNT": 1,
  "DEPLOYMENT_COUNT": 2,
  "CONFIGMAP_DATA": {
    "TZ": "UTC",
    "JOHN": "DOE"
  }
}
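Before running anything, it can be useful to confirm that the values file covers every placeholder a blueprint uses. The sketch below is one possible pre-flight check and is not part of the sample repository; the missing_values function is hypothetical, and it treats any placeholder with a default(...) filter as optional.

```python
import json
import re

# Captures the placeholder name and any filter chain that follows it.
PLACEHOLDER = re.compile(r"\{\{\s*([A-Z_][A-Z0-9_]*)\s*((?:\|[^}]*)?)\}\}")


def missing_values(blueprint: str, values: dict) -> set:
    """Return placeholder names that have neither a value nor a default."""
    missing = set()
    for name, filters in PLACEHOLDER.findall(blueprint):
        if name not in values and "default(" not in filters:
            missing.add(name)
    return missing


# A deliberately incomplete values file, to show what gets reported.
values = json.loads('{"CHART_NAME": "mars", "RELEASE_NAME": "foobar"}')
blueprint = """
suite: test {{ CHART_NAME }} configmap
count: {{ CONFIGMAP_COUNT | default(1) }}
value: {{ CONFIGMAP_DATA | to_yaml | indent(12) }}
"""
print(missing_values(blueprint, values))  # {'CONFIGMAP_DATA'}
```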

With the values.json file configured, we’re ready to initiate the testing process. To do this, we’ll invoke the run_tests.sh script, which orchestrates the entire testing workflow as follows:

./run_tests.sh path/to/chart values.json

This command invokes the script with two arguments: the path to the chart we are testing (sample-code/mars) and the values.json file we just modified, i.e. ./run_tests.sh mars values.json.

Behind the scenes, the script sets in motion a series of operations:

1. It calls renderer.py, a Python script that acts as the engine for our testing framework. The Python script performs several key tasks:

a. It generates a temporary directory named <chart-name>_rendered, which serves as a “sandbox” for our testing.

b. The script then duplicates the contents of the original chart into this new directory.

c. Next, it scans the tests folder within mars_rendered for the templated YAML test suites.

d. For each discovered test file, renderer.py applies the Jinja2 templating engine, substituting the placeholders we discussed earlier with actual values from the values.json file.

e. The resulting fully formed test files are then saved back into the mars_rendered/tests directory.

2. With the tests now prepared, run_tests.sh invokes the helm unittest command, directing it to evaluate the chart in the “sandbox” directory. As the tests execute, you’ll see real-time feedback in the terminal, highlighting any successes or failures.

3. Upon completion of the tests, the script makes a decision:

a. If all tests pass successfully, it tidies things up by removing the temporary mars_rendered directory.

b. However, if any tests fail, the script preserves the mars_rendered directory, allowing for inspection of the generated files and diagnosis of any issues.
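The steps above can be sketched in a few lines of Python. This is an illustrative outline under stated assumptions, not the code in run_tests.sh or renderer.py: the function names are hypothetical, render stands for whatever turns a blueprint into a final test suite, and the -f glob passed to helm unittest is an assumption so that the *_test.yml files are picked up.

```python
import shutil
import subprocess
from pathlib import Path


def helm_unittest(chart_path: Path) -> bool:
    """Run the helm-unittest plugin; exit code 0 means every test passed."""
    # Assumption: -f widens the test glob so the *_test.yml suites are found.
    result = subprocess.run(["helm", "unittest", "-f", "tests/*_test.yml", str(chart_path)])
    return result.returncode == 0


def run_blueprint_tests(chart_dir, render, run_tests=helm_unittest) -> bool:
    """Copy the chart to <chart>_rendered, render its tests, run them, clean up on success."""
    chart = Path(chart_dir)
    sandbox = chart.with_name(chart.name + "_rendered")
    if sandbox.exists():
        shutil.rmtree(sandbox)  # start from a clean sandbox on every run
    shutil.copytree(chart, sandbox)

    # Render every templated test suite in place inside the sandbox.
    for test_file in sorted((sandbox / "tests").glob("*_test.yml")):
        test_file.write_text(render(test_file.read_text()))

    passed = run_tests(sandbox)
    if passed:
        shutil.rmtree(sandbox)  # keep the sandbox only when there is something to inspect
    return passed
```

Passing the test runner in as a parameter keeps the copy, render and clean-up logic checkable without Helm installed.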

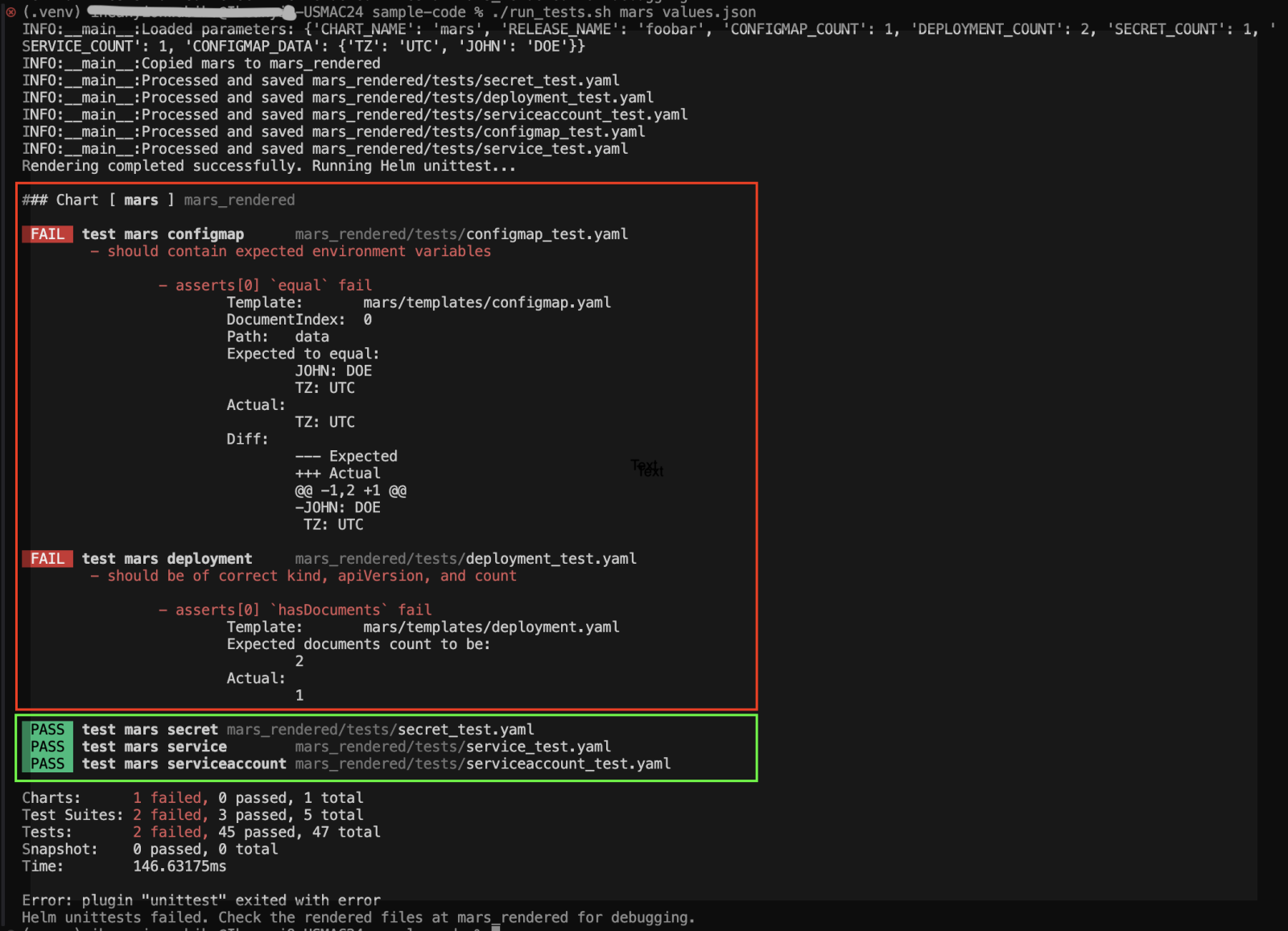

Fig 1: Test result showing failed and passed tests

The above snapshot shows the results of the tests. Overall, out of 47 total tests across five test suites, 45 passed and two failed, with the entire process being completed in about 146 milliseconds. Scrutinising the test suites in the mars_rendered directory allows you to compare the rendered test expectations against the actual chart output, helping pinpoint the exact source of the discrepancy.

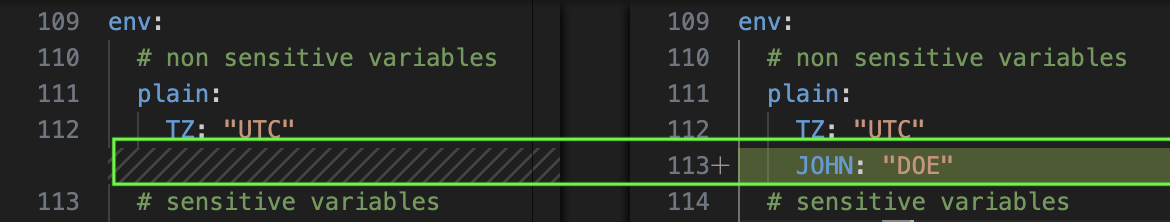

To resolve the ConfigMap test failure, trace the issue by examining the rendered ConfigMap in mars_rendered/templates/configmap.yaml. Compare this with the test expectations in marsrendered/tests/configmaptest.yaml. This investigation will lead you to the env section in the chart's values.yaml file, where you'll need to add the missing JOHN: "DOE" entry under the “plain” subsection to match the test's expectations. This adjustment will ensure the ConfigMap test passes by providing all expected environment variables.

Fig 2: Resolving the ConfigMap data
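The comparison behind this kind of fix can also be expressed as a small key-by-key diff. The sketch below is illustrative only: diff_configmap_data is a hypothetical helper, and the actual mapping is an assumed example of what the chart produced before the fix.

```python
def diff_configmap_data(expected: dict, actual: dict) -> dict:
    """Report keys that are missing, unexpected, or carry a different value."""
    shared = expected.keys() & actual.keys()
    return {
        "missing": sorted(expected.keys() - actual.keys()),
        "unexpected": sorted(actual.keys() - expected.keys()),
        "changed": sorted(key for key in shared if expected[key] != actual[key]),
    }


expected = {"TZ": "UTC", "JOHN": "DOE"}  # CONFIGMAP_DATA from values.json
actual = {"TZ": "UTC"}  # assumed chart output before the fix (hypothetical)
report = diff_configmap_data(expected, actual)
print(report)  # {'missing': ['JOHN'], 'unexpected': [], 'changed': []}
```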

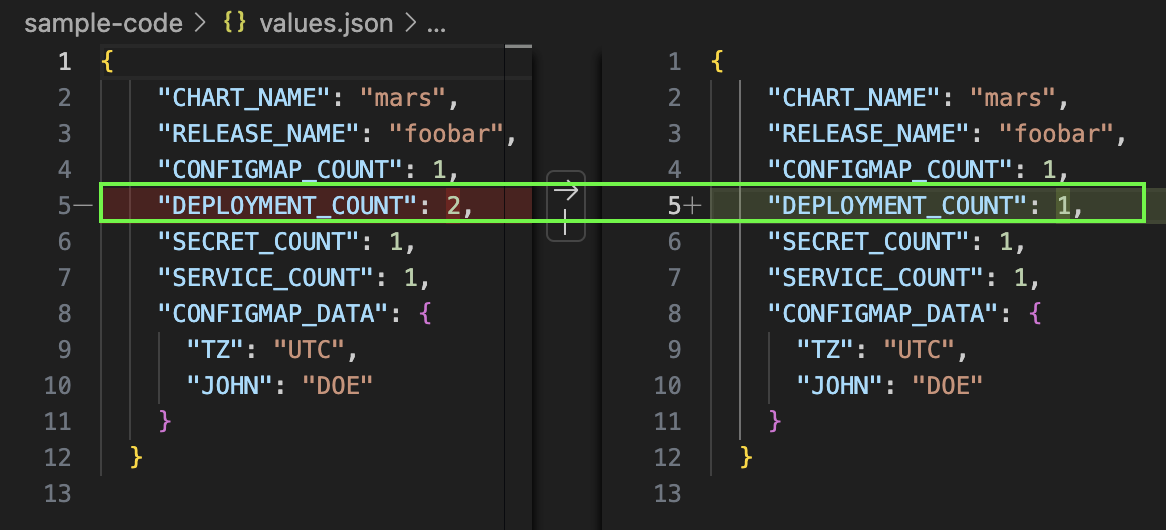

The deployment test failed — it expected two deployment documents but found only one. This could indicate an issue with the chart's deployment configuration or a mismatch between the expected and actual number of deployments. To address it, we'll modify the values.json file to set DEPLOYMENT_COUNT to 1, aligning with the actual number of deployments in our chart. This change will resolve the deployment test failure.

Fig 3: Resolving the deployment count

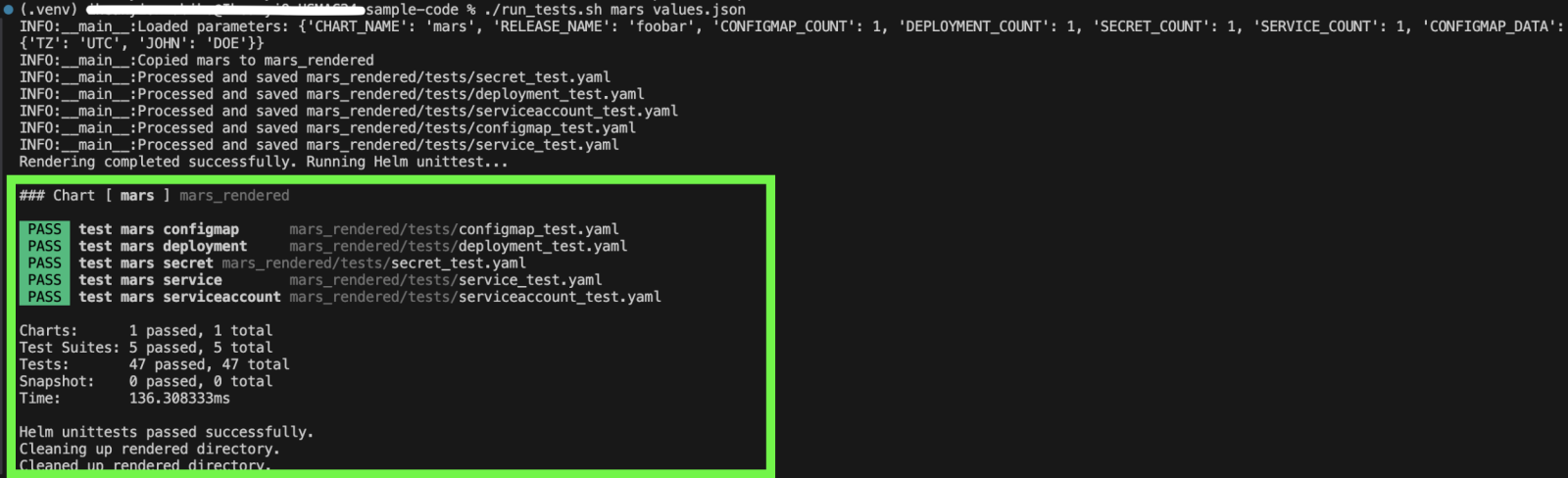

After making these changes, we re-run the test script (./run_tests.sh mars values.json) to verify the fixes. By aligning the test expectations with your chart's configuration, both the deployment and ConfigMap tests should pass successfully.

Fig 4: A successful test

Conclusion

DevOps teams can attain substantial efficiency gains and enhanced reliability by embracing these blueprint Helm unit test templates. Complementing policy-based testing, which verifies compliance from a security and organisational perspective, they validate that Helm charts generate Kubernetes resources with the expected structures and properties. The templates are easy to adapt to specific chart requirements, reduce testing time through reuse, ensure consistency with standardised yet customisable structures and improve deployment reliability.